On Wednesday, Microsoft Research introduced Magma, an integrated AI foundation model that combines visual and language processing to control software interfaces and robotic systems. If the results hold up outside of Microsoft’s internal testing, it could mark a meaningful step forward for an all-purpose multimodal AI that can operate interactively in both real and digital spaces.

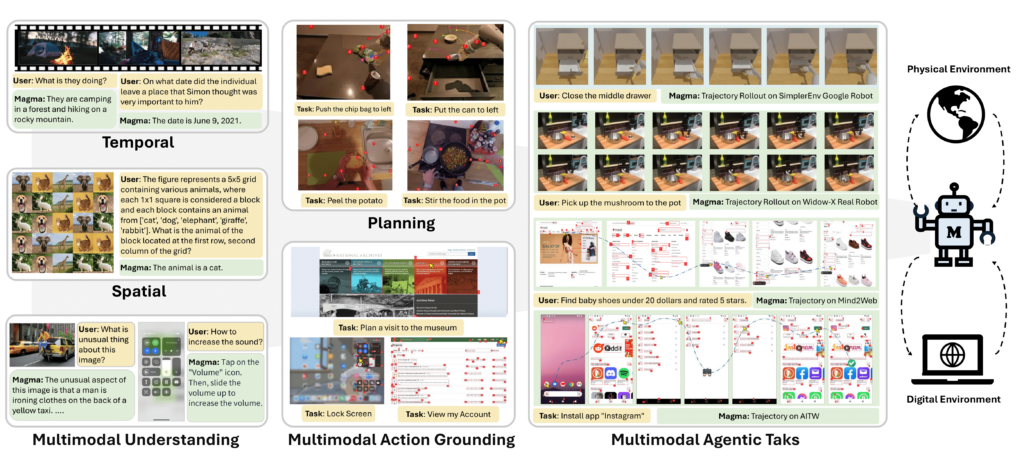

Microsoft claims that Magma is the first AI model that not only processes multimodal data (like text, images, and video) but can also natively act upon it—whether that’s navigating a user interface or manipulating physical objects. The project is a collaboration between researchers at Microsoft, KAIST, the University of Maryland, the University of Wisconsin-Madison, and the University of Washington.

We’ve seen other large language model-based robotics projects, such as Google’s PaLM-E and RT-2 or Microsoft’s ChatGPT for Robotics, that use LLMs as an interface. However, unlike many prior multimodal AI systems that require separate models for perception and control, Magma integrates these abilities into a single foundation model.

Microsoft is positioning Magma as a step toward agentic AI, meaning a system that can autonomously craft plans and perform multistep tasks on a human’s behalf rather than just answering questions about what it sees.

“Given a described goal,” Microsoft writes in its research paper, “Magma is able to formulate plans and execute actions to achieve it. By effectively transferring knowledge from freely available visual and language data, Magma bridges verbal, spatial, and temporal intelligence to navigate complex tasks and settings.”

Microsoft is not alone in its pursuit of agentic AI. OpenAI has been experimenting with AI agents through projects like Operator that can perform UI tasks in a web browser, and Google has explored multiple agentic projects with Gemini 2.0.

Spatial intelligence

While Magma builds on Transformer-based LLM technology that feeds training tokens into a neural network, it differs from traditional vision-language models (like GPT-4V, for example) by going beyond what the researchers call “verbal intelligence” to also include “spatial intelligence” (planning and action execution). Because the model was trained on a mix of images, videos, robotics data, and UI interactions, Microsoft claims that Magma is a true multimodal agent rather than just a perceptual model.

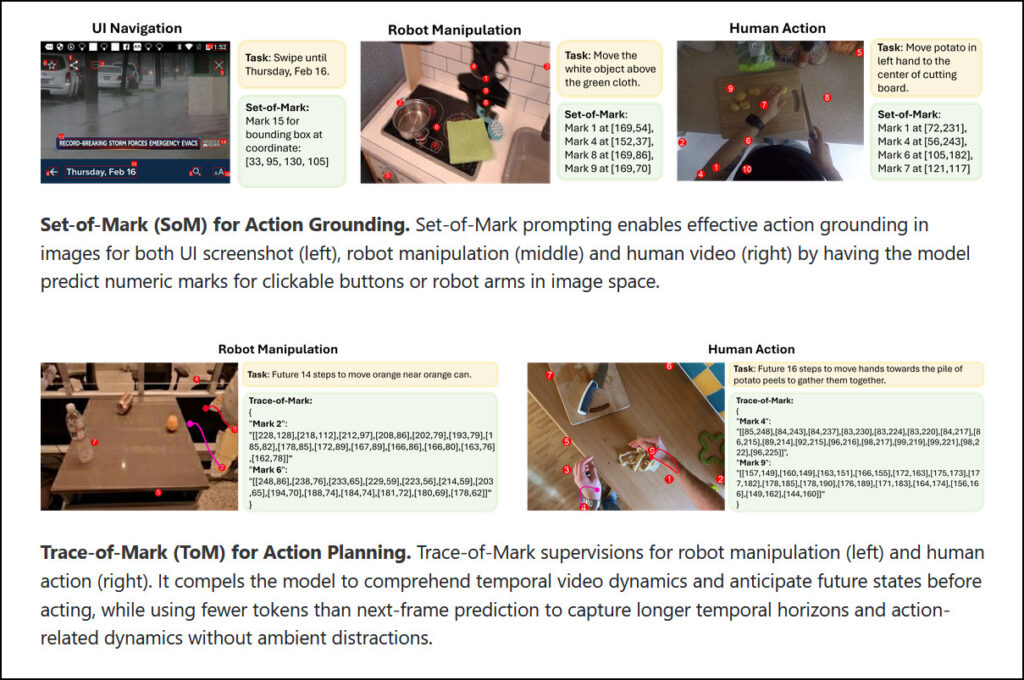

The Magma model introduces two technical components: Set-of-Mark, which identifies objects that can be manipulated in an environment by assigning numeric labels to interactive elements, such as clickable buttons in a UI or graspable objects in a robotic workspace, and Trace-of-Mark, which learns movement patterns from video data. Microsoft says those features allow the model to complete tasks like navigating user interfaces or directing robotic arms to grasp objects.
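To make the Set-of-Mark idea more concrete, here is a minimal Python sketch of the labeling step as the article describes it: interactive elements receive numeric labels that an agent can then refer to when choosing an action. This is an illustration only and not Microsoft's implementation. The `Element` class, the `assign_marks` and `click_point` functions, the reading-order numbering, and the sample screen layout are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    """A hypothetical detected screen element (not part of Magma's code)."""
    name: str
    box: tuple[int, int, int, int]  # (x, y, width, height) in pixels
    interactive: bool


def assign_marks(elements):
    """Number the interactive elements from 1 upward, in reading order.

    Reading order (top to bottom, then left to right) is an assumption
    made for this sketch; any stable ordering would work.
    """
    candidates = [e for e in elements if e.interactive]
    candidates.sort(key=lambda e: (e.box[1], e.box[0]))
    return {mark: e for mark, e in enumerate(candidates, start=1)}


def click_point(marks, mark):
    """Return the center of the element carrying the given numeric mark."""
    x, y, w, h = marks[mark].box
    return (x + w // 2, y + h // 2)


# A made-up screen with one non-interactive and three interactive elements.
screen = [
    Element("logo", (10, 10, 80, 30), False),
    Element("search_box", (100, 10, 200, 30), True),
    Element("submit_button", (310, 10, 60, 30), True),
    Element("settings_link", (10, 60, 90, 20), True),
]

marks = assign_marks(screen)
for mark, element in marks.items():
    print(mark, element.name)
print(click_point(marks, 2))
```

In a setup like this, a model could answer with a mark number (for example, "click 2") instead of raw pixel coordinates, and the surrounding software would translate that number back into a screen position.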

Microsoft Magma researcher Jianwei Yang wrote in a Hacker News comment that the name “Magma” stands for “M(ultimodal) Ag(entic) M(odel) at Microsoft (Rese)A(rch),” after some people noted that “Magma” already belongs to an existing matrix algebra library, which could create some confusion in technical discussions.

Reported improvements over previous models

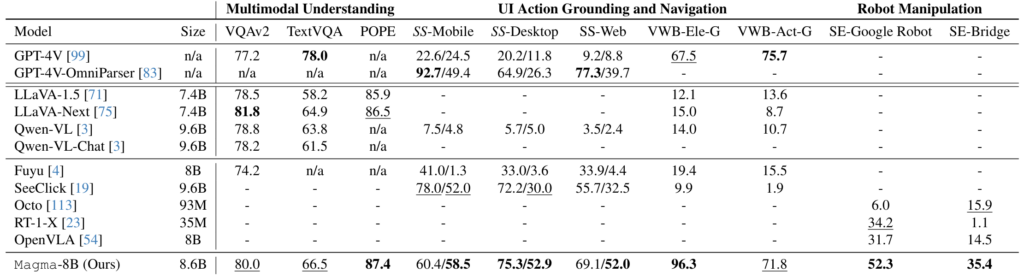

In its Magma write-up, Microsoft claims Magma-8B performs competitively across benchmarks, showing strong results in UI navigation and robot manipulation tasks.

For example, it scored 80.0 on the VQAv2 visual question-answering benchmark, higher than GPT-4V’s 77.2 but lower than LLaVA-Next’s 81.8. Its POPE score of 87.4 leads all models in the comparison. Magma also reportedly outperforms OpenVLA, an open source vision-language-action model, in multiple robot manipulation tasks.

As always, we take AI benchmarks with a grain of salt since many have not been scientifically validated as being able to measure useful properties of AI models. External verification of Microsoft’s benchmark results will become possible once other researchers can access the public code release.

Like all AI models, Magma is not perfect. According to Microsoft’s documentation, it still faces technical limitations in complex decision-making that requires multiple steps over time. The company says it continues to work on improving these capabilities through ongoing research.

Yang says Microsoft will release Magma’s training and inference code on GitHub next week, allowing external researchers to build on the work. If Magma delivers on its promise, it could push Microsoft’s AI assistants beyond limited text interactions, enabling them to operate software autonomously and execute real-world tasks through robotics.

Magma is also a sign of how quickly the culture around AI can change. Just a few years ago, this kind of agentic talk scared many people who feared it might lead to AI taking over the world. While some people still fear that outcome, in 2025, AI agents are a common topic of mainstream AI research that regularly takes place without triggering calls to pause all of AI development.