A pair of Carnegie Mellon University researchers recently discovered hints that the process of compressing information can solve complex reasoning tasks without pre-training on a large number of examples. Their system tackles some types of abstract pattern-matching tasks using only the puzzles themselves, challenging conventional wisdom about how machine learning systems acquire problem-solving abilities.

“Can lossless information compression by itself produce intelligent behavior?” ask Isaac Liao, a first-year PhD student, and his advisor Professor Albert Gu from CMU’s Machine Learning Department. Their work suggests the answer might be yes. To demonstrate, they created CompressARC and published the results in a comprehensive post on Liao’s website.

The pair tested their approach on the Abstraction and Reasoning Corpus (ARC-AGI), an unbeaten visual benchmark created in 2019 by machine learning researcher François Chollet to test AI systems’ abstract reasoning skills. ARC presents systems with grid-based image puzzles, each of which provides several examples demonstrating an underlying rule; the system must infer that rule and apply it to a new example.
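To make the puzzle format concrete, here is a minimal Python sketch of how one ARC-style task might be represented. The `train`/`test` and `input`/`output` field names follow the public ARC JSON layout as we understand it; the tiny task and the `flip_horizontal` rule are invented for illustration and do not come from the benchmark. Checking a hand-written rule like this is also not how CompressARC works; it only shows what "inferring the rule from examples" means.

```python
# A hypothetical, hand-made ARC-style task: grids are lists of rows,
# and each cell is an integer color code.
task = {
    "train": [
        {"input": [[1, 0, 0], [2, 0, 0]], "output": [[0, 0, 1], [0, 0, 2]]},
        {"input": [[0, 3, 0], [4, 0, 0]], "output": [[0, 3, 0], [0, 0, 4]]},
    ],
    "test": [{"input": [[5, 0, 0], [0, 6, 0]]}],
}


def flip_horizontal(grid):
    """Candidate rule (an assumption for this toy task): mirror each row."""
    return [list(reversed(row)) for row in grid]


def rule_fits(rule, examples):
    """Return True if the rule reproduces every demonstration pair."""
    return all(rule(ex["input"]) == ex["output"] for ex in examples)


# Only apply the rule to the test grid if it explains all the examples.
prediction = None
if rule_fits(flip_horizontal, task["train"]):
    prediction = flip_horizontal(task["test"][0]["input"])
print(prediction)
```

In this toy case the mirrored test grid is the answer; real ARC-AGI rules are far more varied, which is what makes the benchmark hard.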

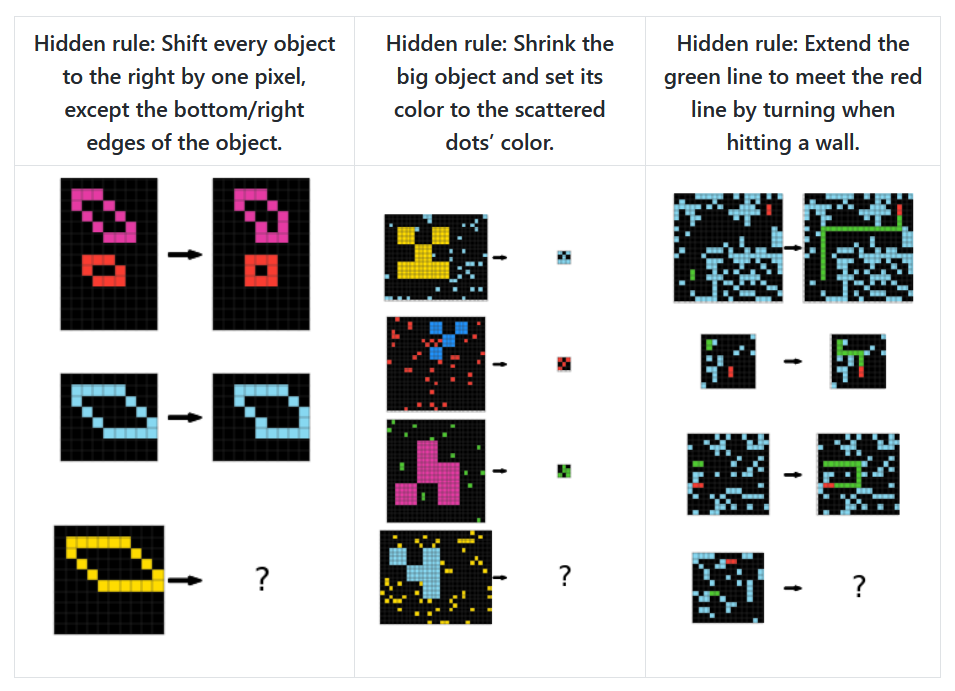

For instance, one ARC-AGI puzzle shows a grid with light blue rows and columns dividing the space into boxes. The task requires figuring out which colors belong in which boxes based on their position: black for corners, magenta for the middle, and directional colors (red for up, blue for down, green for right, and yellow for left) for the remaining boxes. Here are three other example ARC-AGI puzzles, taken from Liao’s website:

The puzzles test capabilities that some experts believe may be fundamental to general human-like reasoning (often called “AGI” for artificial general intelligence). Those properties include understanding object persistence, goal-directed behavior, counting, and basic geometry without requiring specialized knowledge. The average human solves 76.2 percent of the ARC-AGI puzzles, while human experts reach 98.5 percent.

OpenAI made waves in December 2024 with the claim that its o3 simulated reasoning model earned a record-breaking score on the ARC-AGI benchmark. In testing with computational limits, o3 scored 75.7 percent on the test, while in high-compute testing (basically unlimited thinking time), it reached 87.5 percent, which OpenAI says is comparable to human performance.

CompressARC achieves 34.75 percent accuracy on the ARC-AGI training set (the collection of puzzles used to develop the system) and 20 percent on the evaluation set (a separate group of unseen puzzles used to test how well the approach generalizes to new problems). Each puzzle takes about 20 minutes to process on a consumer-grade RTX 4070 GPU, compared to top-performing methods that use heavy-duty data center-grade machines and what the researchers describe as “astronomical amounts of compute.”

Not your typical AI approach

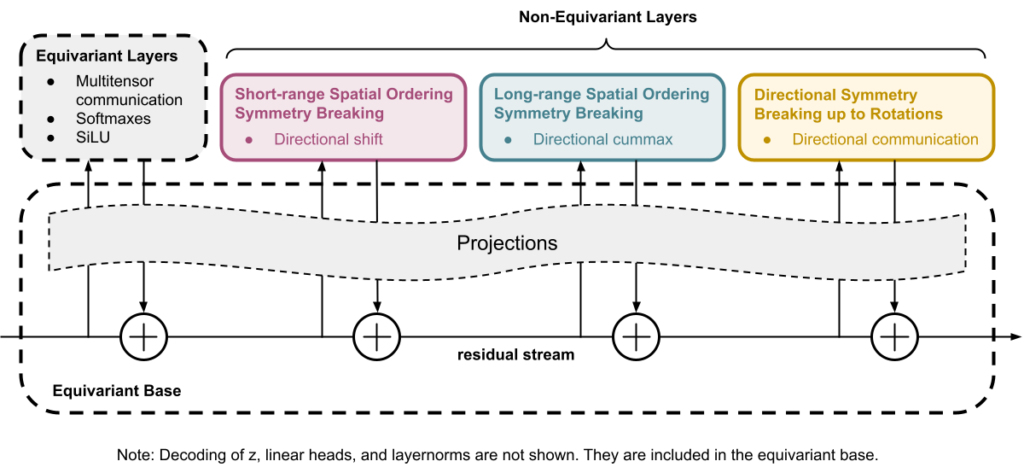

CompressARC takes a completely different approach from most current AI systems. Instead of relying on pre-training (the process where machine learning models learn from massive datasets before tackling specific tasks), it works with no external training data whatsoever. The system trains itself in real time using only the specific puzzle it needs to solve.

“No pretraining; models are randomly initialized and trained during inference time. No dataset; one model trains on just the target ARC-AGI puzzle and outputs one answer,” the researchers write, describing their strict constraints.

The researchers also specify “No search,” a reference to another common technique in AI problem-solving where systems try many different possible solutions and select the best one. Search algorithms work by systematically exploring options, like a chess program evaluating thousands of possible moves, rather than directly learning a solution. CompressARC avoids this trial-and-error approach and relies solely on gradient descent, a mathematical technique that incrementally adjusts the network’s parameters to reduce errors, similar to how you might find the bottom of a valley by always walking downhill.
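The "walking downhill" idea can be sketched in a few lines of Python. This is a generic illustration of gradient descent on a one-variable toy function, not CompressARC's training code; the function, starting point, learning rate, and step count are all assumptions chosen for the example.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, i.e. always walk downhill."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * grad(x)
    return x


def grad_f(x):
    """Gradient of the toy valley f(x) = (x - 3)^2, whose bottom is at x = 3."""
    return 2.0 * (x - 3.0)


x_min = gradient_descent(grad_f, x0=0.0)
print(x_min)  # ends up very close to 3.0
```

A neural network applies the same update to every one of its parameters at once, with the "error" playing the role of the valley's height.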

The system’s core principle treats compression (finding the most efficient way to represent information by identifying patterns and regularities) as the driving force behind intelligence. CompressARC looks for the shortest possible description of a puzzle that, when unpacked, accurately reproduces the examples and the solution.

The compression-intelligence connection

The potential connection between compression and intelligence may sound strange at first, but it has deep theoretical roots in computer science concepts like Kolmogorov complexity (the length of the shortest program that produces a specified output) and Solomonoff induction, a theoretical gold standard for prediction equivalent to an optimal compression algorithm.
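Kolmogorov complexity cannot be computed in general, but any real compressor gives a rough upper bound on it. The sketch below uses `zlib` from Python's standard library as a stand-in compressor (an assumption for illustration; it is not what CompressARC uses) to show that data with an obvious pattern has a much shorter description than data without one.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Size in bytes after zlib compression at the highest level."""
    return len(zlib.compress(data, 9))


patterned = b"ab" * 5000          # 10,000 bytes with an obvious regularity
random_like = os.urandom(10000)   # 10,000 bytes with no exploitable structure

print(compressed_size(patterned))    # a tiny fraction of the original size
print(compressed_size(random_like))  # roughly the original size
```

The patterned input shrinks dramatically because "repeat `ab` 5,000 times" is a short description; the random bytes have no shorter description than themselves.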

To compress information efficiently, a system must recognize patterns, find regularities, and “understand” the underlying structure of the data—abilities that mirror what many consider intelligent behavior. A system that can predict what comes next in a sequence can compress that sequence efficiently. As a result, some computer scientists over the decades have suggested that compression may be equivalent to general intelligence. Based on these principles, the Hutter Prize has offered awards to researchers who can compress a 1GB file to the smallest size.
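The link between prediction and compression can be made concrete: with an ideal entropy coder, a symbol predicted with probability p costs about -log2(p) bits to store. The following sketch compares two hypothetical predictors on an alternating sequence; the models, the probabilities, and the sequence are invented for illustration.

```python
import math


def code_length_bits(sequence, predict):
    """Ideal code length: sum of -log2 p(symbol | history) over the sequence."""
    total = 0.0
    for i, symbol in enumerate(sequence):
        probs = predict(sequence[:i])
        total += -math.log2(probs[symbol])
    return total


def uniform_model(history):
    """Knows nothing: both symbols are always equally likely."""
    return {"a": 0.5, "b": 0.5}


def alternating_model(history):
    """Hypothetical predictor that expects the symbols to alternate."""
    if not history:
        return {"a": 0.5, "b": 0.5}
    previous = history[-1]
    expected = "b" if previous == "a" else "a"
    return {expected: 0.9, previous: 0.1}


sequence = "abababababababab"
print(code_length_bits(sequence, uniform_model))      # 1 bit per symbol
print(code_length_bits(sequence, alternating_model))  # far fewer bits
```

The model that has "understood" the alternation needs only a few bits for the whole sequence, while the uninformed model needs one bit per symbol.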

We previously wrote about intelligence and compression in September 2023, when a DeepMind paper reported that large language models can sometimes outperform specialized compression algorithms. In that study, researchers found that DeepMind’s Chinchilla 70B model could compress image patches to 43.4 percent of their original size (beating PNG’s 58.5 percent) and audio samples to just 16.4 percent (outperforming FLAC’s 30.3 percent).

That 2023 research suggested a deep connection between compression and intelligence—the idea that truly understanding patterns in data enables more efficient compression, which aligns with this new CMU research. While DeepMind demonstrated compression capabilities in an already-trained model, Liao and Gu’s work takes a different approach by showing that the compression process can generate intelligent behavior from scratch.